The Pro-Eating-Disorder Internet Is Back

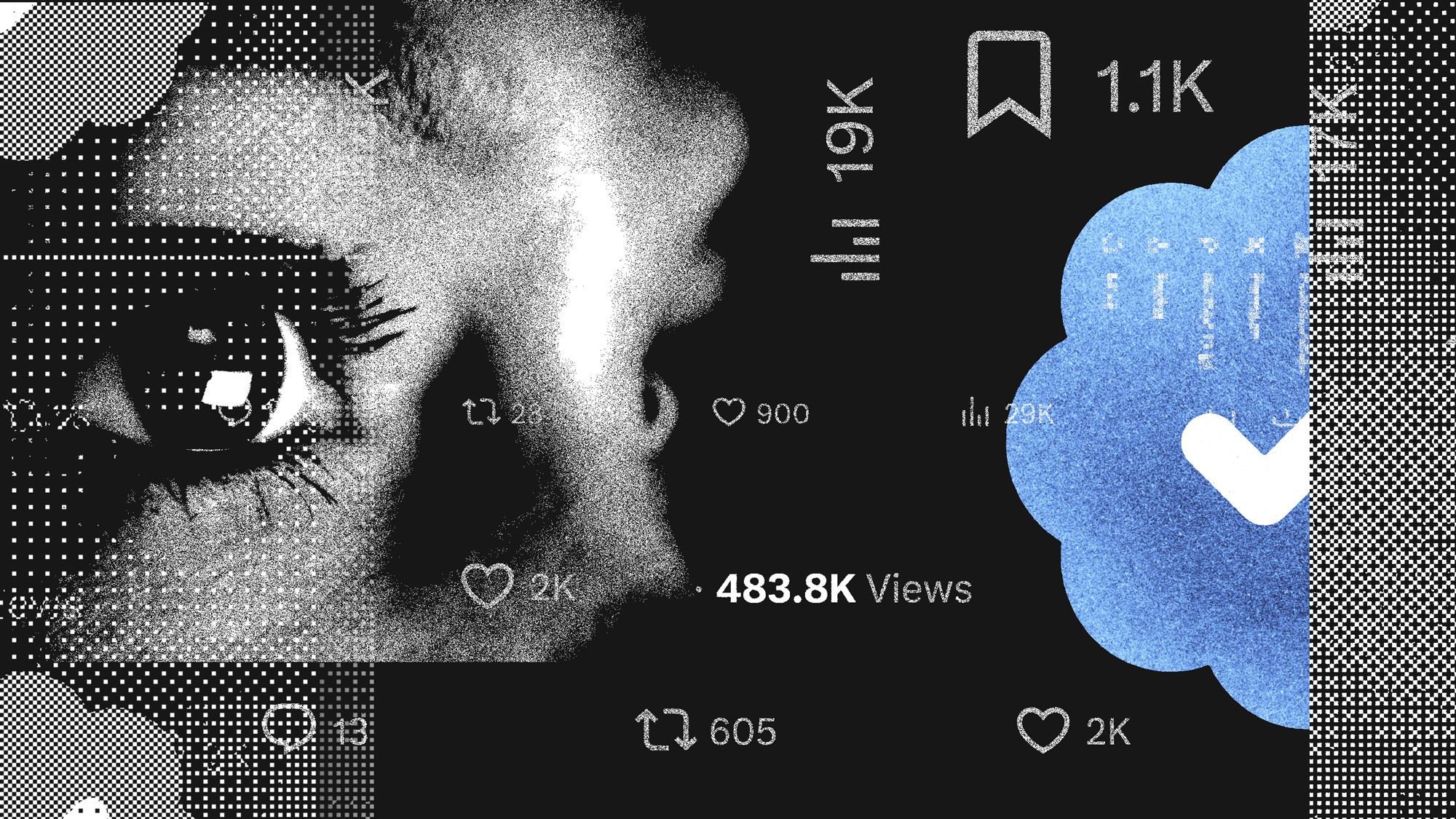

One of the thorniest content-moderation problems grows out of control on X.

The glorification of dangerous thinness is a long-standing problem in American culture, and it is especially bad on the internet, where users can find an unending stream of extreme dieting instructions, “thinspo” photo boards, YouTube videos that claim to offer magical weight-loss spells, and so on. There has always been a huge audience for this type of content, much of which is highly visual and emotionally charged, and spreads easily.

Most of the large social-media platforms have been aware of this reality for years and have undertaken at least basic measures to address it. On most of these platforms, at a minimum, if you search for certain well-known keywords related to eating disorders—as people who are attracted or vulnerable to such content are likely to do—you’ll be met with a pop-up screen asking if you need help and suggesting that you contact a national hotline. On today’s biggest platforms for young people, Instagram and TikTok, that screen is a wall: You can’t tap past it to get to search results. This is not to say that these sites do not host photos and videos glamorizing eating disorders, only that finding them usually isn’t as easy as simply searching.

X, however, offers a totally different experience. If you search for popular tags and terms related to eating disorders, you’ll be shown accounts that have those terms in their usernames and bios. You’ll be shown relevant posts and recommendations for various groups to join under the header “Explore Communities.” The impression communicated by many of these posts, which typically include stylized photography of extremely skinny people, is that an eating disorder is an enviable lifestyle rather than a mental illness and dangerous health condition. The lifestyle is in fact made to seem even more aspirational by the way that some users talk about its growing popularity and their desire to keep “wannarexics”—wannabe anorexics—out of their community. Those who are accepted, though, are made to feel truly accepted: They’re offered advice and positive feedback from the broader group.

Technically, all of this violates X’s published policy against the encouragement of self-harm. But there’s a huge difference between having a policy and enforcing one. X has also allowed plenty of racist and anti-Semitic content under Elon Musk’s reign despite having a policy against “hateful conduct.” The site is demonstrating what can happen when a platform’s rules effectively mean nothing. (X did not respond to emails about this issue.)

This moment did not emerge from a vacuum. The social web is solidly in a regressive moment when it comes to content moderation. Major platforms had been pushed to act on misinformation in response to seismic events including the 2016 presidential election, the coronavirus pandemic, the Black Lives Matter protests of 2020, the rise of QAnon, and the January 6 insurrection, but have largely retreated after backlash from Donald Trump–aligned Republicans who equate moderation with censorship. That equation is one of the reasons Musk bought Twitter in the first place—he viewed it as a powerful platform that was operating with heavy favor toward his enemies and restricting the speech of his friends. After he took over the site, in 2022, he purged thousands of employees and vowed to roll back content-moderation efforts that had been layered onto the platform over the years. “These teams whose full-time job it was to prevent harmful content simply are not really there,” Rumman Chowdhury, a data scientist who formerly led a safety team at pre-Musk Twitter, told me. They were fired or dramatically reduced in size when Musk took over, she said.


Now the baby has been thrown out with the bathwater, Vaishnavi J, an expert in youth safety who worked at Twitter and then at Instagram, told me. (I agreed not to publish her full name because she is concerned about targeted harassment; she also publishes research using just her last initial.) “Despite what you might say about Musk,” she told me, “I think if you showed him the kind of content that was being surfaced, I don’t think he would actually want it on the platform.” To that point, in October, NBC News’s Kat Tenbarge reported that X had removed one of its largest pro-eating-disorder groups after she drew the company’s attention to it over the course of her reporting. Yet she also reported that new groups quickly sprang up to replace it, which is plainly true. Just before Thanksgiving, I found (with minimal effort) a pro-eating-disorder group that had nearly 74,000 members; when I looked this week to see whether it was still up, it had grown to more than 88,000 members. (Musk did not respond to a request for comment.)

That growth tracks with user reports that X is not only hosting eating-disorder content but actively recommending it in the algorithmically generated “For You” feed, even if people don’t wish to see it. Researchers are now taking an interest: Kristina Lerman, a professor at the University of Southern California who has previously published research on online eating-disorder content, is part of a team finalizing a new paper about the way that pro-anorexia rhetoric circulates on X. “There is this echo chamber, this highly interlinked community,” she told me. It’s also very visible, which is why X is developing a reputation as a place to go to find that kind of content. X communities openly use terms like proana and thinspo, and even bonespo and deathspo, terms that romanticize eating disorders to an extreme degree by alluding fondly to their worst outcomes.

Eating-disorder content has been one of the thorniest content-moderation issues since the beginning of the social web. It was prevalent in early online forums and endemic to Tumblr, which was where it started to take on a distinct visual aesthetic and set of community rituals that have been part of the internet in various forms ever since. (Indeed, it was a known problem on Twitter even before Musk took over the site.) There are many reasons this material presents such a difficult moderation problem. For one thing, as opposed to hate speech or targeted harassment, it is less likely to be flagged by users—participants in the communities are unlikely to report themselves. On the contrary, creators of this content are highly motivated to evade detection and will innovate with coded language to get around new interventions. A platform that really wants to minimize the spread of pro-eating-disorder content has to work hard at it, staying on top of the latest trends in keywords and euphemisms and being constantly on the lookout for subversions of its efforts.

As an additional challenge, the border between content that glorifies eating disorders and content that is simply part of our culture’s fanatical fixation on thinness, masked as “fitness” and “health” advice, is not always clear. This means that moderation has to have a human element and has to be able to process a great deal of nuance—to understand how to approach the problem without causing inadvertent harm. Is it dangerous, for instance, to dismantle someone’s social network overnight when they’re already struggling? Is it productive to allow some discussion of eating disorders if that discussion is about recovery? Or can that be harmful too?


These questions are subjects of ongoing research and debate; the role that the internet plays in disordered-eating habits has been discussed now for decades. Yet, looking at X in 2024, you wouldn’t know it. After searching just once for the popular term edtwt—“eating disorder Twitter”—and clicking on a few of the suggested communities, I immediately started to see this type of content in the main feed of my X account. Scrolling through my regular mix of news and jokes, I would be served posts like “a mega thread of my favourite thinsp0 for edtwt” and “what’s the worst part about being fat? … A thread for edtwt to motivate you.”

I found this shocking mostly because it was so simplistic. We hear all the time about how complex the recommendation algorithms are for today’s social platforms, but all I had done was search for something once and click around for five minutes. It was oddly one-to-one. But when I told Vaishnavi about this experience, she wasn’t surprised. “Recommendation algorithms highly value engagement, and ED content is very popular,” she told me. If I had searched for something less popular, which the site was less readily able to provide, I might not have seen a change in my feed.

When I spoke with Amanda Greene, who published extensively about online eating-disorder content as a researcher at the University of Michigan, she emphasized the big, newer problem of recommendation algorithms. “That’s what made TikTok notorious, and that’s what I think is making eating-disorder content spread so widely on X,” she said. “It’s one thing to have this stuff out there if you really, really search for it. It’s another to have it be pushed on people.”

It was also noticeable how starkly cruel much of the X content was. To me, it read like an older style of pro-eating-disorder content. It wasn’t just romanticization of super thinness; it looked like the stuff you would see 10 years ago, when it was much more common for people to post photos of themselves on social media and ask for others to tear them apart. On X, I was seeing people say horrible things to one another in the name of “meanspo” (“mean inspiration”) that would encourage them not to eat.

Though she wasn’t collecting data on X at the moment, Greene said that what she’d been hearing about anecdotally was similar to what I was being served in my X feed. Vicious language in the name of “tough love” or “support” was huge in years past and is now making its way back. “I think maybe part of the reason it had gone out was content moderation,” Greene told me. Now it’s back, and everybody knows where to find it.